Moving from Next Token Prediction to The Sculpted Geometry of Language

Why next token prediction is such a shallow and uninteresting frame

The fixation on next token prediction by both detractors and proponents of LLMs strikes me as odd. Some dismissively say, "It's just next token prediction," while proponents say, "Isn't it amazing that next token prediction works so well?!" But both perspectives, to me, seem to miss a deeper point.

After all, next token prediction is simply the chain rule of probability in action, a way to write a joint probability distribution as a product of conditionals. So its effectiveness in generating text shouldn't be surprising, assuming we've obtained such a distribution. Once a joint distribution over (sub)strings is factored into a series of conditional probabilities, sampling from those conditionals one token at a time will, of course, construct sentences.

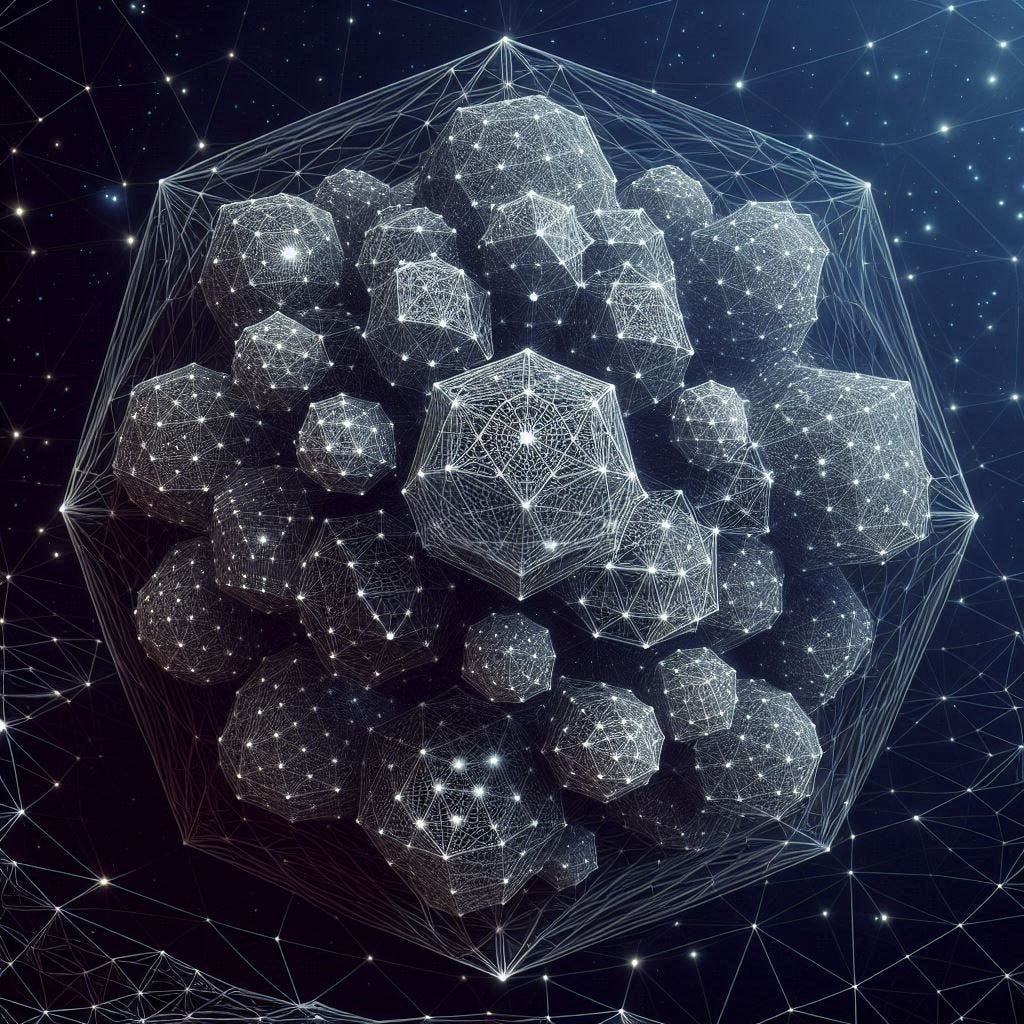
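To make the chain-rule point concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the two-token vocabulary, the string length, the randomly weighted toy `joint` distribution, and the helper names `prefix_prob`, `next_token_prob`, `chain_rule_prob`, and `sample`. It only shows that, given a joint distribution over strings, the next-token conditionals multiply back to that joint and can be sampled one token at a time.

```python
import itertools
import random

VOCAB = ["a", "b"]   # hypothetical two-token vocabulary
LENGTH = 3           # hypothetical fixed string length

# Toy joint distribution over all length-3 strings: random weights, normalized.
rng = random.Random(0)
strings = ["".join(s) for s in itertools.product(VOCAB, repeat=LENGTH)]
weights = [rng.random() for _ in strings]
total = sum(weights)
joint = {s: w / total for s, w in zip(strings, weights)}


def prefix_prob(prefix):
    """Marginal probability that a string starts with `prefix`."""
    return sum(p for s, p in joint.items() if s.startswith(prefix))


def next_token_prob(prefix, token):
    """Conditional p(token | prefix), read off the joint by marginalization."""
    return prefix_prob(prefix + token) / prefix_prob(prefix)


def chain_rule_prob(s):
    """Rebuild p(s) as a product of next-token conditionals."""
    prob = 1.0
    for i, token in enumerate(s):
        prob *= next_token_prob(s[:i], token)
    return prob


def sample(generator):
    """Generate a string one token at a time from the conditionals."""
    prefix = ""
    for _ in range(LENGTH):
        probs = [next_token_prob(prefix, t) for t in VOCAB]
        prefix += generator.choices(VOCAB, weights=probs)[0]
    return prefix
```

For every string `s`, `chain_rule_prob(s)` agrees with `joint[s]` up to floating-point error, and `sample(random.Random(1))` draws a string from the same distribution. Nothing about this is specific to language; the hard part is obtaining the joint in the first place.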

What I find truly amazing, however, is not the concept of next token prediction but the sculpting process with cross entropy. Starting with a parameterization in terms of piecewise-linear functions and using a cross-entropy loss, we sculpt our way into a geometric construction that can talk back to us. This is amazing to me because it's not immediately obvious that this process should yield such impressive results. It is, at the very least, a partial vindication of the idea that compression is intimately related to intelligence. We're using cross entropy as a proxy to minimize the divergence between the data distribution of language tokens and our current approximating function; the two quantities differ only by the entropy of the data, which does not depend on the model. (Intriguingly, because these functions are piecewise linear, they are like hyperdimensional versions of the 3D meshes we use in video games.) The fact that this relatively simple approach works so well is what's truly remarkable.
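The sense in which cross entropy stands in for divergence can be checked numerically. The sketch below is illustrative only: `data_dist` and `model_dist` are made-up three-token distributions, and the function names are my own. It verifies the standard identity that cross entropy equals the entropy of the data plus the KL divergence from the data to the model, so lowering one lowers the other.

```python
import math


def entropy(p):
    """Shannon entropy H(p) in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """Cross entropy H(p, q) in nats; p is the data, q is the model."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """KL(p || q) in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical next-token distributions over a three-token vocabulary.
data_dist = [0.7, 0.2, 0.1]
model_dist = [0.5, 0.3, 0.2]

gap = cross_entropy(data_dist, model_dist) - entropy(data_dist)
# `gap` matches kl_divergence(data_dist, model_dist) up to rounding.
```

Because `entropy(data_dist)` is fixed by the data, the only way to reduce the cross entropy is to shrink the KL term, which reaches zero exactly when the model matches the data.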

The function definition is basic, the cross-entropy operation is straightforward, and the computation of updates is a mechanical process. Yet, from this simplicity emerges surprising complexity. The emphasis on next token prediction, whether to marvel at it or to dismiss it, completely overlooks what's truly remarkable.
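To show just how mechanical the update is, here is a deliberately stripped-down sketch. It is an assumption-laden toy, not how an LLM is trained: the "model" is a bare vector of logits rather than a piecewise-linear network, the `target` distribution is invented, and `train`, `steps`, and `lr` are hypothetical names and values. It uses the well-known fact that the gradient of cross entropy with respect to softmax logits is the model distribution minus the target distribution.

```python
import math


def softmax(logits):
    """Convert logits to a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(p, q):
    """Cross entropy H(p, q) in nats; p is the target, q is the model."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def train(target, steps=500, lr=0.5):
    """Plain gradient descent on the logits under a cross-entropy loss."""
    logits = [0.0] * len(target)  # start from a uniform model
    losses = []
    for _ in range(steps):
        q = softmax(logits)
        losses.append(cross_entropy(target, q))
        # Gradient of the loss with respect to each logit is (q_i - p_i).
        logits = [z - lr * (qi - pi) for z, qi, pi in zip(logits, q, target)]
    return softmax(logits), losses


# Hypothetical target distribution over a three-token vocabulary.
fitted, losses = train([0.7, 0.2, 0.1])
```

After training, `fitted` sits close to the target and `losses` has fallen from its starting value toward the entropy of the target. The loop is a few lines of arithmetic; what is striking is what the same recipe produces at scale.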